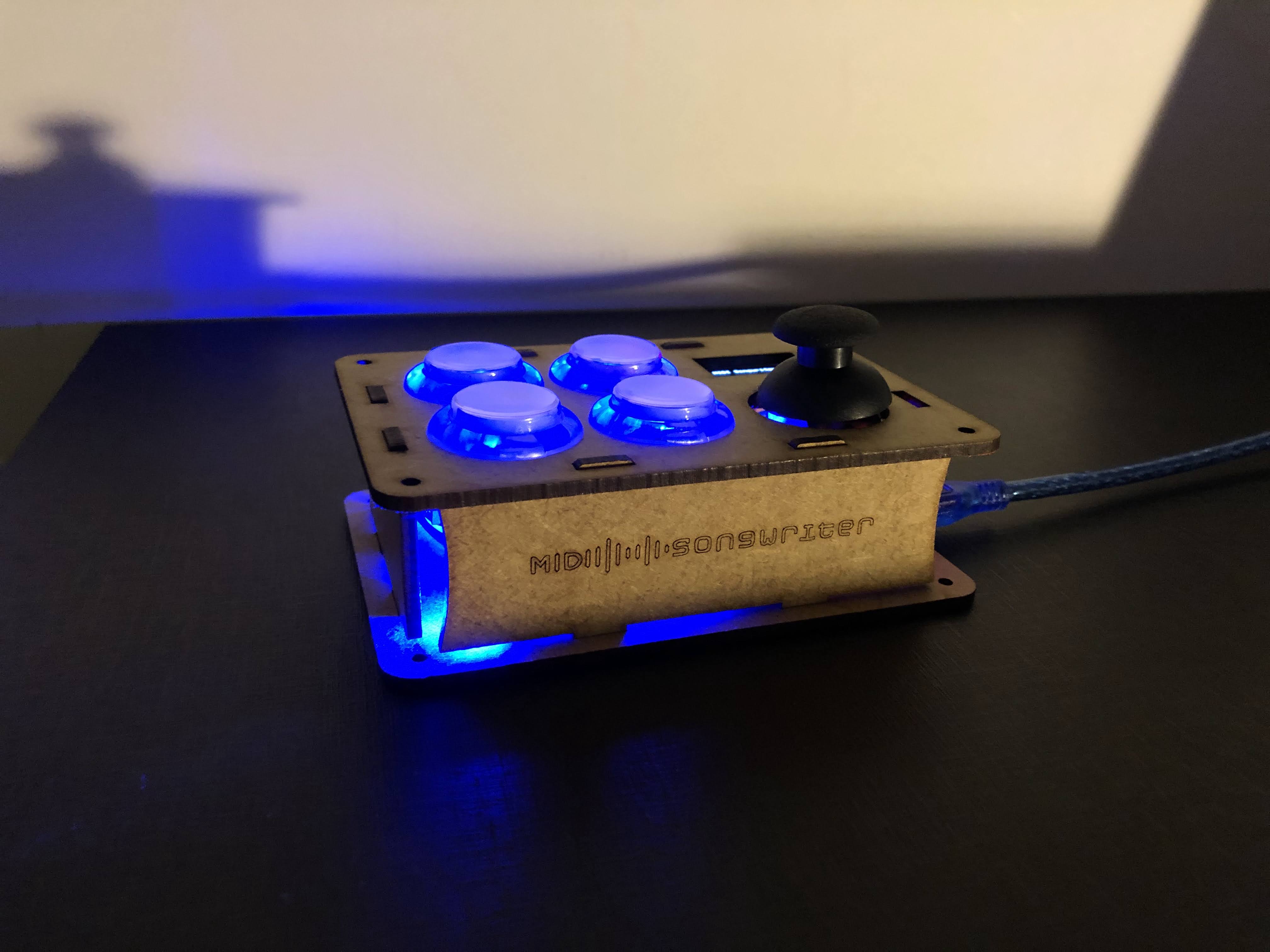

Piece 1 — MIDI Songwriter

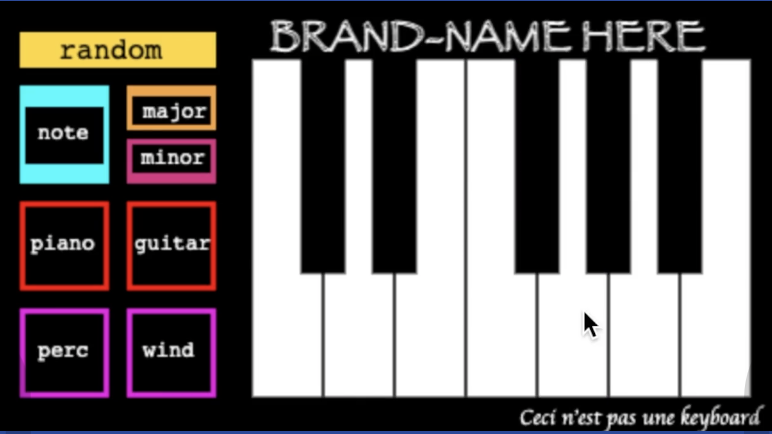

Concept: The MIDI Songwriter is a hardware instrument designed to make harmony intuitive. Rather than requiring knowledge of music theory, the instrument encodes harmonic relationships into its physical layout and controls, allowing non-expert musicians to create coherent musical phrases even when their gestures are still exploratory or imprecise. The project explores how physical affordances can preserve a player's agency before they have formed explicit harmonic intent.
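As an illustration of what "encoding harmonic relationships into the interface" can mean, the sketch below assigns each of seven pads one diatonic triad of a major key, so that any pad a player presses produces a chord that fits the key. This is a hypothetical mapping, not the instrument's documented design: the pad count, the choice of C major, and the names `diatonic_triad` and `PAD_CHORDS` are assumptions made for the example.

```python
# Hypothetical sketch: each pad is bound to one diatonic triad of a major key,
# so every press yields an in-key chord regardless of the player's theory knowledge.

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the major scale


def diatonic_triad(root_midi: int, degree: int) -> list[int]:
    """Return MIDI note numbers for the triad built on a scale degree (0-6)."""
    notes = []
    for step in (0, 2, 4):  # stack scale thirds: root, third, fifth
        octave, pos = divmod(degree + step, 7)  # wrap past the seventh degree
        notes.append(root_midi + 12 * octave + MAJOR_SCALE[pos])
    return notes


# Assumed layout: pads 0-6 play I, ii, iii, IV, V, vi, vii° in C major (C4 = MIDI 60).
PAD_CHORDS = [diatonic_triad(60, degree) for degree in range(7)]

if __name__ == "__main__":
    for pad, chord in enumerate(PAD_CHORDS):
        print(pad, chord)
```

Because chord quality (major, minor, diminished) falls out of the scale itself, the player never has to choose it; the layout constrains choices to ones that sound coherent together.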

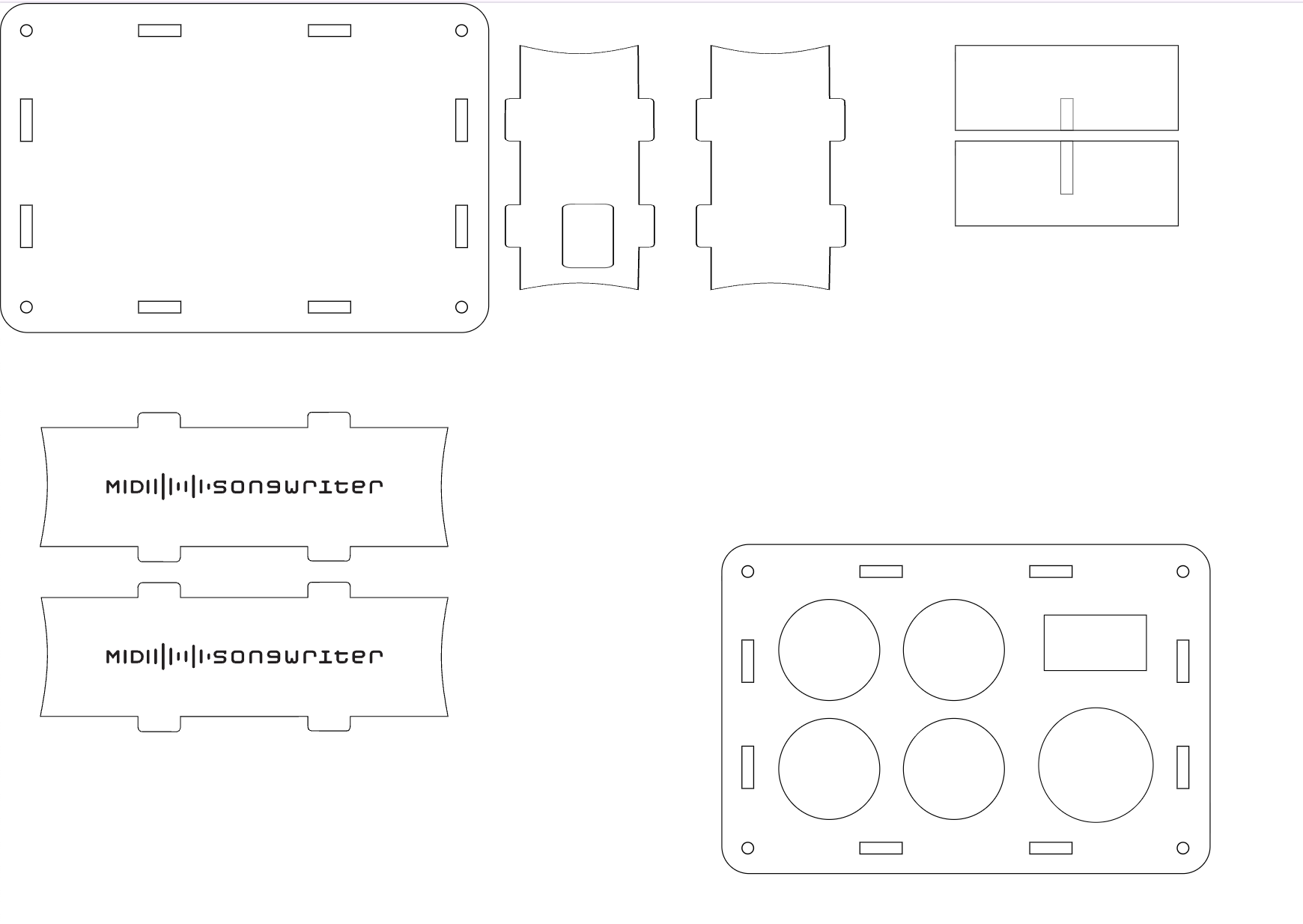

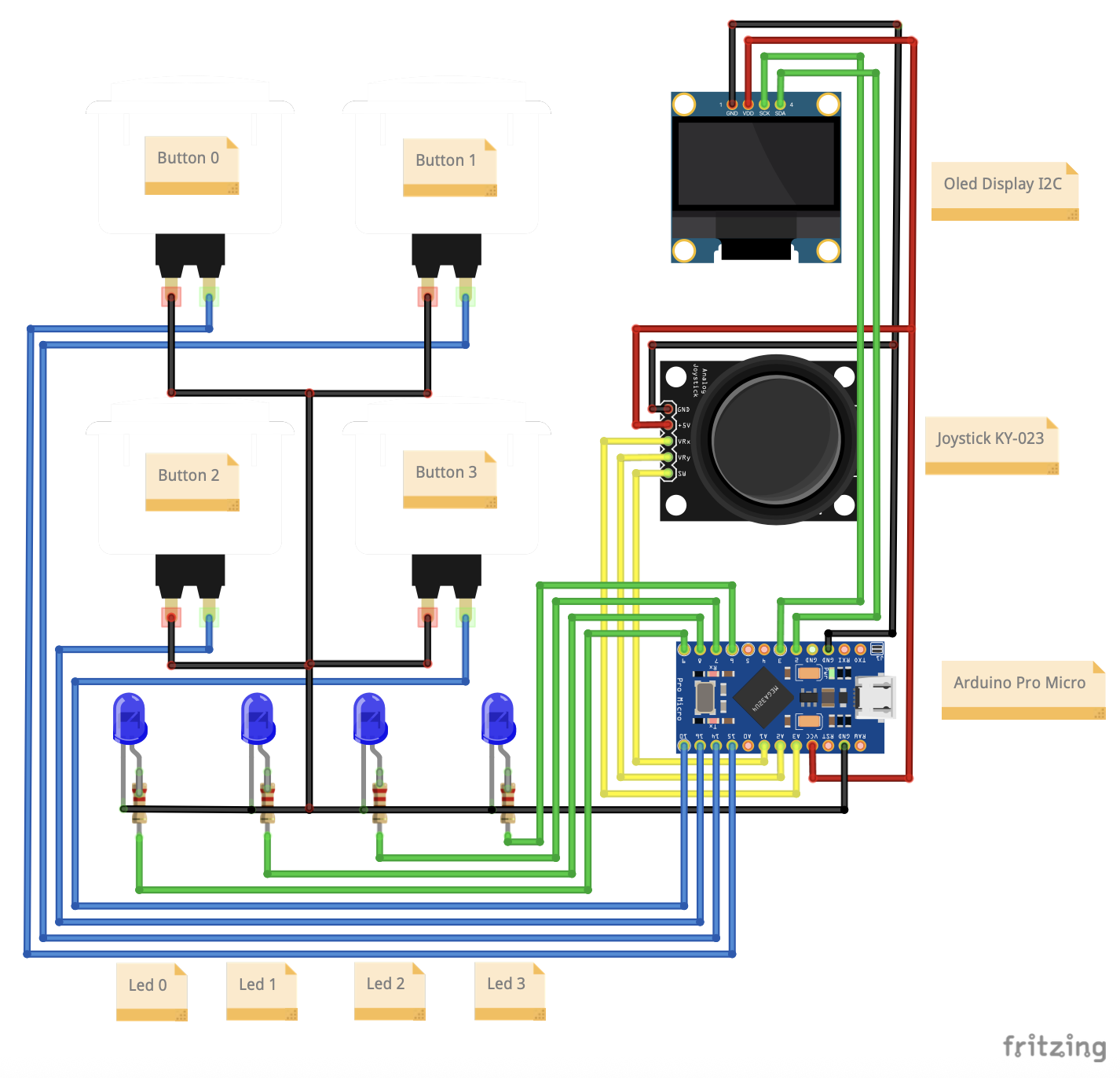

Method & Implementation: Designed and fabricated a custom enclosure and tactile interface; developed embedded firmware for real-time sensor processing and MIDI communication, with visual feedback through RGB LEDs to guide user interaction.
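The sensor-to-MIDI path described above can be sketched roughly as follows: a raw sensor reading is scaled to a MIDI velocity, and each chord note is sent as a standard three-byte Note On message (status `0x90` combined with the channel, then note and velocity). This is a minimal sketch under stated assumptions, not the project's firmware: the 10-bit ADC range and the helper names `scale_to_velocity`, `note_on`, `note_off`, and `chord_messages` are hypothetical.

```python
# Hypothetical sketch of turning a sensor reading into raw MIDI bytes.
# Assumes a 10-bit ADC (0-1023); the real hardware's sensors may differ.

NOTE_ON = 0x90   # MIDI Note On status nibble
NOTE_OFF = 0x80  # MIDI Note Off status nibble


def scale_to_velocity(raw: int, raw_max: int = 1023) -> int:
    """Map a raw sensor reading to MIDI velocity 1-127.

    Velocity 0 is avoided because a Note On with velocity 0 acts as Note Off.
    """
    raw = max(0, min(raw, raw_max))  # clamp out-of-range readings
    return max(1, round(raw * 127 / raw_max))


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a three-byte Note On message (channel 0-15)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_ON | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    """Build a three-byte Note Off message with release velocity 0."""
    if not (0 <= channel <= 15 and 0 <= note <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_OFF | channel, note, 0])


def chord_messages(notes: list[int], velocity: int, channel: int = 0) -> bytes:
    """Concatenate Note On messages for every note in a chord."""
    return b"".join(note_on(channel, n, velocity) for n in notes)


if __name__ == "__main__":
    vel = scale_to_velocity(512)  # a medium-pressure press
    print(chord_messages([60, 64, 67], vel).hex(" "))
```

On real hardware these bytes would be written to a serial MIDI port or a USB-MIDI endpoint; the same press event could also drive the RGB LEDs so the visual feedback stays in sync with the sound.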